If you are building an AI price prediction model, a high-quality e-commerce dataset is crucial to its accuracy. This article presents five of the best ways to collect an e-commerce dataset that helps optimize your price prediction model.

What Is an E-Commerce Dataset?

An e-commerce dataset is a collection of data gathered from e-commerce platforms such as Shopee, Lazada, TikTok Shop, Amazon, or eBay that reflects transaction activity and buyer and seller behavior in the market. Businesses use it to analyze market trends, compare pricing across competing stores, optimize pricing strategies, and, above all, train AI models for price prediction, demand forecasting, or product recommendation.

Because e-commerce data can change from hour to hour, a comprehensive, clean, and continuously updated dataset is an essential foundation for practical artificial intelligence (AI) solutions that need to scale.

What Defines a High-Quality E-Commerce Dataset

An e-commerce dataset is only truly valuable when it gives you a complete and accurate picture of the market. In practice, that means meeting the following criteria:

- The dataset must contain all important fields: daily price history; sales, inventory, and views; product attributes (color, size, variant, etc.); shop/seller information; review and rating data; and promotional signals such as flash sales.

- The data must be accurate and consistent: a common flaw in many datasets is missing, noisy, or inconsistent data across days, which causes the model to learn incorrect patterns. A quality dataset does not lose fields over time, records identical values in the same format, contains no duplicate or noisy records, and is not skewed by scraping or source errors.

- The data must be continuously updated: product prices on popular platforms such as Shopee, Lazada, and TikTok Shop change constantly. If the data is not fresh (not refreshed daily or even hourly), lacks a history long enough for the model to “look back” at past behavior, or lacks clear timestamps, the model is very likely to make incorrect predictions.

- The dataset must be deep enough for the model to learn complex patterns: granularity is a critical factor. A sufficiently deep dataset contains prices by SKU (not just by product), data broken down by sales campaign, inventory fluctuations over time, and buyer behavior (ratings, reviews, and keyword searches, if available).

- The dataset must be scalable: for businesses providing data tools or AI products, the dataset needs to scale easily as they add new marketplaces, new regions (VN, TH, ID…), more products per industry, and higher update frequencies.
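To make these criteria more concrete, here is a minimal Python sketch of an automated quality audit over one batch of records. The `PriceRecord` layout, its field names, and the one-day freshness threshold are hypothetical choices for illustration, not a standard schema; a production pipeline would check many more fields and rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional


# Hypothetical record layout covering some of the fields listed above.
@dataclass(frozen=True)
class PriceRecord:
    platform: str            # e.g. "shopee", "lazada", "tiktokshop"
    sku_id: str              # SKU-level key, not just the product ID
    price: Optional[float]   # selling price in local currency
    stock: Optional[int]
    rating: Optional[float]
    captured_at: datetime    # timezone-aware capture time


REQUIRED = ("price", "stock", "rating")


def audit(records: Iterable[PriceRecord], now: datetime,
          max_age: timedelta = timedelta(days=1)) -> dict:
    """Count common quality problems in a batch of price records."""
    issues = {"missing_fields": 0, "duplicates": 0, "bad_price": 0, "stale_skus": 0}
    seen = set()
    latest = {}  # (platform, sku_id) -> most recent capture time
    for r in records:
        if any(getattr(r, f) is None for f in REQUIRED):
            issues["missing_fields"] += 1
        key = (r.platform, r.sku_id, r.captured_at)
        if key in seen:
            issues["duplicates"] += 1
        seen.add(key)
        if r.price is not None and r.price <= 0:
            issues["bad_price"] += 1
        sku = (r.platform, r.sku_id)
        if sku not in latest or r.captured_at > latest[sku]:
            latest[sku] = r.captured_at
    # A SKU is stale if even its newest observation is older than max_age.
    issues["stale_skus"] = sum(1 for t in latest.values() if now - t > max_age)
    return issues


# Example batch: one exact duplicate and one SKU with a missing price and old data.
now = datetime(2024, 6, 2, tzinfo=timezone.utc)
recent = datetime(2024, 6, 1, 12, tzinfo=timezone.utc)
old = datetime(2024, 5, 20, tzinfo=timezone.utc)
batch = [
    PriceRecord("shopee", "A-red-M", 199000.0, 12, 4.8, recent),
    PriceRecord("shopee", "A-red-M", 199000.0, 12, 4.8, recent),
    PriceRecord("lazada", "B-blue-L", None, 3, 4.1, old),
]
print(audit(batch, now))
# {'missing_fields': 1, 'duplicates': 1, 'bad_price': 0, 'stale_skus': 1}
```

Freshness is checked per SKU rather than per row, so a long price history is not flagged simply because its early rows are old.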

Why High-Quality E-Commerce Datasets Matter for Price Prediction AI

A price prediction AI model is only truly effective when it is trained on a dataset that is deep, clean, and frequently updated. Businesses building AI platforms therefore need e-commerce data detailed enough for the model to learn real market patterns.

A high-quality dataset helps AI capture the entire product lifecycle: when prices rise or fall, the reasons for fluctuations, the correlation between ratings, sales, and selling prices, or the impact of seller attributes on purchasing decisions. When data is missing fields, lacks history, or is updated slowly, the model will quickly become inaccurate and unable to reflect actual market behavior.

Therefore, owning a standardized e-commerce dataset is not only a competitive advantage but also the foundation for building reliable and commercially deployable AI products.

The Best Methods to Get an E-Commerce Dataset for Price Prediction AI Models

Each AI model requires different levels of detail, freshness, historical length, and data structure. Choosing the wrong collection method can lead to issues such as missing, biased, inconsistent, or insufficiently “fresh” data for the model to learn pricing patterns correctly.

The following five methods for collecting e-commerce datasets are the main strategies that businesses building price prediction AI products should consider. Comparing them helps you identify which sources suit your system, control costs, shorten deployment time, and ensure that the input data meets the quality standards your model needs to perform well.

- Buying Ready-Made E-Commerce Datasets from Trusted B2B Providers

Purchasing ready-made e-commerce datasets from reputable B2B providers is often the fastest and lowest-risk option for businesses that need data for AI price prediction models but do not want to invest in complex technical infrastructure.

This method is particularly suitable for AI platform providers that need raw, comprehensive data with a long history to deploy models in a short time.

The main advantage of purchased datasets is that the data is usually standardized, clearly structured, and accompanied by metadata, so technical teams can integrate it into their processing pipelines with little cleaning. You can also access large datasets from Shopee, Lazada, or TikTok Shop at a level of detail that is hard to reach quickly through scraping.

However, businesses also need to carefully consider the scope of the data, the update frequency, and the vendor’s reliability before purchasing. A reputable provider needs to ensure accurate data, complete information fields (price, inventory, sales, ratings, seller info, etc.), provide samples for review, and support regular updates as needed.

- Using E-Commerce Data Scraping Techniques

When a price prediction model needs fresher or more customized data than ready-made datasets offer, e-commerce data scraping is usually the best choice.

Scraping has three main advantages over the other options: it can flexibly collect data from fast-moving marketplaces whose prices closely reflect market behavior (Shopee, Lazada, TikTok Shop, eBay, Amazon, etc.), it can run in near real time, and it can be customized deeply to the needs of a specific price prediction model.

To support professional price prediction models, businesses can choose between two options: building their own data scraping pipeline or using a third-party e-commerce data scraping service.
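If you take the build route, one of the first pieces an in-house scraper needs is a politeness layer. The sketch below assumes you have already downloaded the site's robots.txt; it uses Python's standard `urllib.robotparser` to check whether a URL may be fetched and enforces a minimum delay between requests. The `PoliteGate` class, user-agent string, and default delay are hypothetical; the sketch does not fetch pages, handle CAPTCHAs, or replace a review of each platform's terms of service.

```python
import time
from urllib.robotparser import RobotFileParser


class PoliteGate:
    """Hypothetical helper: robots.txt check plus a minimum delay between requests."""

    def __init__(self, robots_txt: str, user_agent: str, min_delay: float = 2.0):
        self.user_agent = user_agent
        self.parser = RobotFileParser()
        # parse() accepts the robots.txt content as a list of lines.
        self.parser.parse(robots_txt.splitlines())
        declared = self.parser.crawl_delay(user_agent)  # None if no Crawl-delay applies
        self.delay = max(min_delay, float(declared or 0))
        self._last = None  # clock time of the previous request

    def allowed(self, url: str) -> bool:
        """True if robots.txt permits this user agent to fetch the URL."""
        return self.parser.can_fetch(self.user_agent, url)

    def wait(self, clock=time.monotonic, sleep=time.sleep) -> None:
        """Block until at least `delay` seconds have passed since the last request.

        clock and sleep are injectable so the timing logic can be tested offline.
        """
        now = clock()
        if self._last is not None:
            remaining = self.delay - (now - self._last)
            if remaining > 0:
                sleep(remaining)
                now = clock()
        self._last = now
```

A scraper loop would call `gate.allowed(url)` before each request and `gate.wait()` immediately before sending it, so the site's declared crawl delay is never undercut.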

Build or buy an e-commerce data scraping solution?

| | Build your own data scraping pipeline | Buy e-commerce data scraping services |
|---|---|---|
| Advantages | Full control over data; internal security; optimized for your own AI framework | Time savings; pre-optimized infrastructure; consistent data and easy integration |
| Disadvantages | Requires significant technical resources; difficult to maintain when the platform changes its structure; must handle complex CAPTCHA and anti-bot systems; long deployment time | Dependence on the vendor; potential limitations on customization |

As the comparison shows, building your own scraping pipeline mainly suits businesses with strong technical resources and large budgets. For most companies developing price prediction models and data tools, however, the deciding factors are deployment speed, scalability, and a stable data stream, which is why many choose a professional scraping service instead of building their own.

Among experienced providers, Easy Data is a noteworthy option because it collects large-scale raw e-commerce data from Shopee, Lazada, and TikTok Shop. With flexible customization and both historical and real-time data, Easy Data helps businesses shorten deployment time and focus on what matters most: building accurate AI models that scale over the long term.

- Using E-Commerce Data Tools (API Providers)

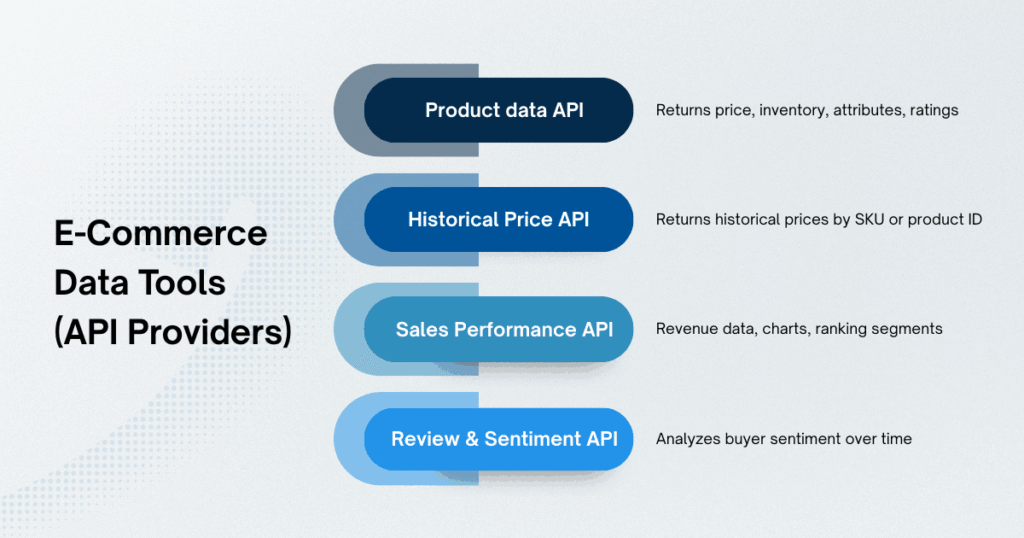

Another quick and effective method to obtain an e-commerce dataset is to use specialized API tools built for e-commerce data, such as:

- Product Data API: returns price, inventory, attributes, and ratings.

- Historical Price API: returns historical prices by SKU or product ID.

- Sales Performance API: revenue data, charts, ranking segments.

- Review & Sentiment API: analyzes buyer sentiment over time.

These tools give you near-real-time access to e-commerce data without building a complex collection system yourself. A well-maintained API returns consistent, deduplicated records with the important fields populated, and providers typically store full price and revenue history, which lets the model learn how volatile the market is. Because the data structure is standardized, businesses can feed it into AI dashboards, BI systems, or price prediction pipelines with little reprocessing.

Because many such tools are on the market today, choosing a high-quality one matters. The following criteria help businesses pick e-commerce data tools suited to AI price prediction:

- Historical depth of at least 6–12 months

- SKU-level detail rather than product-level only

- High update frequency (daily or hourly)

- Consistent data schema

- Clear error handling and sensible retry logic

- Complete API documentation and a sample dataset

- Support for multiple markets through a single endpoint
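The schema and retry criteria above can be illustrated with a small client-side wrapper. This is a sketch under assumptions: `fetch` stands in for whatever call your provider's SDK or HTTP endpoint exposes, `TransientAPIError` is a hypothetical exception for retryable failures such as timeouts or HTTP 429/5xx responses, and the required field names are illustrative.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical error type for retryable failures (timeouts, HTTP 429/5xx)."""


# Illustrative canonical fields; a real provider's schema will differ.
REQUIRED_FIELDS = {"sku_id", "price", "stock", "captured_at"}


def fetch_with_retry(fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            payload = fetch()
        except TransientAPIError:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            # Delay doubles each attempt (base, 2*base, 4*base, ...) plus up to 10% jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
            continue
        missing = REQUIRED_FIELDS - set(payload)
        if missing:
            # A schema violation is not transient, so fail fast instead of retrying.
            raise ValueError(f"response missing fields: {sorted(missing)}")
        return payload
```

Retrying only transient errors while failing fast on schema violations keeps a broken feed from silently filling the training set with incomplete rows.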

- Crowdsourced Data + Public E-Commerce Data Dumps

This method is often chosen by research teams, small startups, or experimental projects because it is low-cost but still provides a good enough e-commerce dataset to start training models.

Public data sources such as Kaggle, Google Dataset Search, GitHub repositories, or datasets published by universities provide diverse information, including product data, sample price histories, user reviews, and other useful metadata.

However, the biggest limitations of this method are that public data is not real-time, often lacks continuous historical timelines, and rarely keeps its fields consistent. In addition, many public datasets do not cover newer marketplaces such as TikTok Shop or rapidly growing industries.
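Before training on a public dump, it is worth measuring how continuous its price timelines actually are. A minimal sketch, assuming the dataset can be reduced to `(sku_id, day)` pairs and that one observation per SKU per day is expected (both are assumptions, not properties of any particular Kaggle or GitHub dataset):

```python
from collections import defaultdict
from datetime import date, timedelta


def missing_days(observed, start: date, end: date) -> list:
    """Return the calendar days in [start, end] with no price observation."""
    seen = set(observed)
    gaps = []
    day = start
    while day <= end:
        if day not in seen:
            gaps.append(day)
        day += timedelta(days=1)
    return gaps


def coverage_by_sku(rows, start: date, end: date) -> dict:
    """Fraction of days in the window observed for each SKU.

    rows: iterable of (sku_id, day) pairs extracted from the public dataset.
    """
    days_by_sku = defaultdict(set)
    for sku_id, day in rows:
        days_by_sku[sku_id].add(day)
    total = (end - start).days + 1
    return {sku: 1 - len(missing_days(days, start, end)) / total
            for sku, days in days_by_sku.items()}
```

SKUs whose coverage falls below a threshold you choose can then be dropped or flagged before they distort the model's view of price movements.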

Overall, this low-cost method mainly benefits businesses that need an e-commerce dataset to test an initial price prediction model; on its own, it is not suitable for developing a sophisticated one.

- Hybrid Method: Vendor Dataset + Custom Scraping

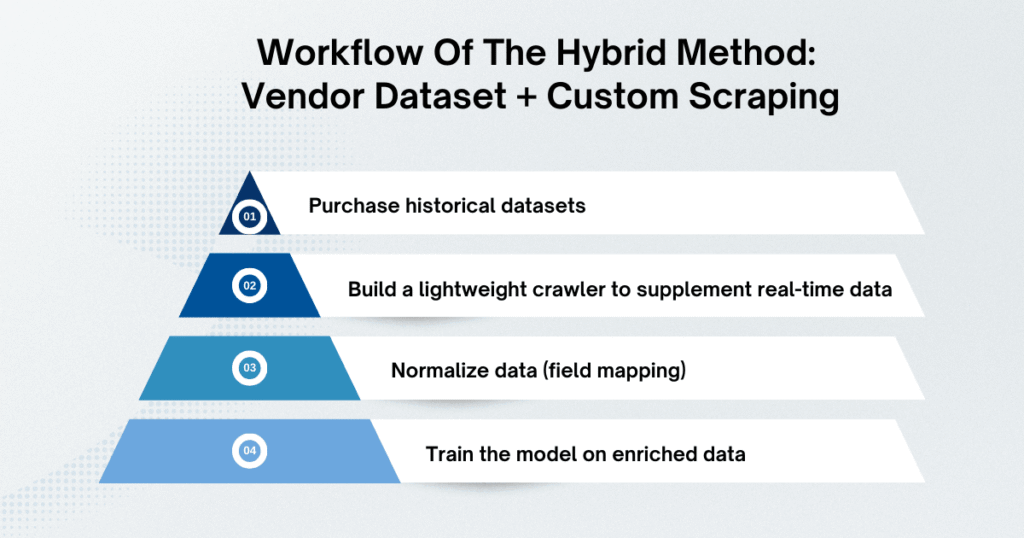

Many businesses developing specialized AI price prediction models are choosing a hybrid approach that combines purchasing e-commerce datasets from vendors with building their own pipelines. This hybrid approach helps businesses strike a balance between implementation costs and the quality of results obtained.

A typical implementation of the hybrid method (vendor dataset + custom scraping) looks like this:

- Purchase historical datasets

- Build a lightweight crawler to supplement real-time data

- Normalize data (field mapping)

- Train the model on enriched data
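The normalization step is essentially a field mapping from each source's schema onto one canonical schema, followed by deduplication. A sketch under stated assumptions: the source field names in `FIELD_MAPS` are hypothetical, timestamps are assumed to be ISO 8601 strings, and when both sources report the same SKU at the same timestamp the crawler's row is kept on the assumption that it is fresher.

```python
from datetime import datetime

# Hypothetical source schemas mapped onto one canonical schema.
FIELD_MAPS = {
    "vendor": {"item_sku": "sku_id", "sale_price": "price",
               "qty": "stock", "ts": "captured_at"},
    "crawler": {"sku": "sku_id", "price_now": "price",
                "stock_left": "stock", "scraped_at": "captured_at"},
}


def normalize(record: dict, source: str) -> dict:
    """Rename fields to the canonical schema and coerce types."""
    mapping = FIELD_MAPS[source]
    out = {canon: record.get(src) for src, canon in mapping.items()}
    out["price"] = float(out["price"]) if out["price"] is not None else None
    out["captured_at"] = datetime.fromisoformat(out["captured_at"])
    out["source"] = source
    return out


def merge(vendor_rows, crawler_rows) -> list:
    """Combine both sources, one row per (sku_id, captured_at); crawler rows win ties."""
    merged = {}
    # Vendor rows are inserted first, so crawler rows overwrite them on a key clash.
    for source, rows in (("vendor", vendor_rows), ("crawler", crawler_rows)):
        for r in rows:
            n = normalize(r, source)
            merged[(n["sku_id"], n["captured_at"])] = n
    return sorted(merged.values(), key=lambda r: (r["sku_id"], r["captured_at"]))
```

The merged, time-ordered rows are what the final training step would consume; keeping a `source` column makes it easy to audit later which provider each price came from.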

This process gives businesses a reasonably deep e-commerce dataset that scales across industries and markets and that they can easily control and customize, provided they accept the considerable cost of building the pipeline and keeping a professional technical team to maintain it.

Conclusion

Building an accurate, stable, and scalable AI price prediction model directly depends on the quality of the e-commerce dataset that the business uses. Each data collection method has its own advantages and disadvantages, and the appropriate choice will depend on the business’s goals, resources, and desired implementation speed.
