Using an ecommerce web scraper to extract product or pricing data is often the first step teams take when working with marketplace data. However, as data needs scale, scraping quickly stops being a scripting problem and becomes a pipeline design challenge.

This article focuses on how to build a reliable data pipeline around an ecommerce web scraper (from ingestion to analytics-ready datasets). Rather than explaining how to write scraping code, it examines how technical teams design, operate, and maintain scraping-based pipelines that remain stable over time.

What a Data Pipeline Means in an Ecommerce Context

In ecommerce environments, a data pipeline is not just a sequence of extraction jobs. It is a repeatable system that continuously converts volatile marketplace pages into structured, analyzable data. The key distinction is simple:

- Scraping jobs retrieve data once or sporadically

- Data pipelines ingest, validate, store, and refresh data continuously

Because ecommerce platforms change frequently (layouts, category structures, attributes), a pipeline must be designed to tolerate instability while preserving analytical consistency.

Where an Ecommerce Web Scraper Fits in the Pipeline Architecture

Before building anything, it is critical to place the ecommerce web scraper in the correct architectural role within the overall data pipeline.

High-Level Pipeline Architecture

A typical ecommerce data pipeline consists of four layers:

- Ingestion layer – Collects raw data from marketplaces

- Processing layer – Cleans, normalizes, and deduplicates data

- Storage layer – Persists raw and processed datasets

- Consumption layer – Feeds BI tools, models, and reports

The scraper belongs exclusively to the ingestion layer.

The Role of an Ecommerce Web Scraper

An ecommerce scraper is responsible for acquiring raw marketplace signals at the point of entry into the pipeline. Its core responsibilities include:

- Accessing publicly available marketplace data

- Capturing raw HTML or structured responses

- Preserving source context (URLs, timestamps, identifiers)

It should not handle business logic, aggregation or analytical metrics.

Confusing these responsibilities often leads to brittle systems that are hard to maintain.

Scrapers Compared to Other Ingestion Methods

In practice, an ecommerce web scraper behaves very differently from APIs or managed data feeds at the ingestion layer. Scrapers provide high control and flexibility, but introduce greater exposure to structural changes, data gaps, and maintenance overhead.

These characteristics directly influence pipeline design choices (such as retry logic, schema validation, and monitoring depth), that are less critical when working with APIs or pre-collected datasets. Understanding these trade-offs helps teams design pipelines that account for the inherent instability of scraped data rather than treating ingestion as a black box.

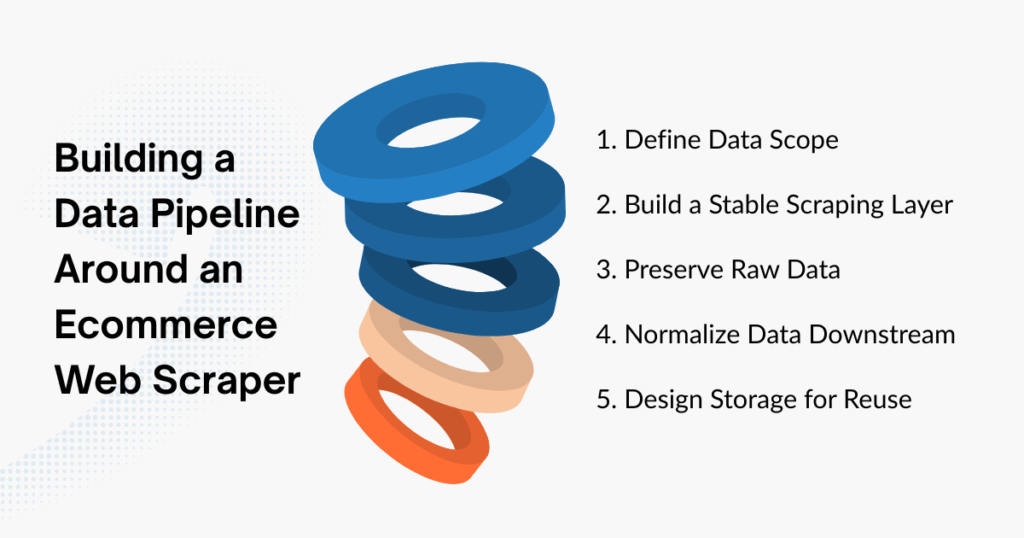

Step-by-Step: Building a Data Pipeline Around an Ecommerce Web Scraper

This section explains how technical teams actually design pipelines around scraping components.

Step 1: Define Data Scope Before Scraping

Pipeline reliability starts with scope definition, not extraction logic. Key decisions include:

- Which marketplaces and regions to cover

- Which entities matter (products, sellers, categories)

- Update frequency (daily, weekly, near-real-time)

These decisions determine crawl depth, scheduling, and downstream storage requirements. Scraping without a clear scope often results in bloated datasets that are expensive to maintain and difficult to analyze.

Step 2: Design the Scraping Layer for Stability, Not Speed

Speed optimization is rarely the correct first goal. Best practices for the scraping layer include:

- Controlled request pacing instead of burst crawling

- Retry mechanisms with backoff strategies

- Isolation of scraper failures from downstream processing

A well-designed ecommerce web scraper prioritizes consistent access over maximum throughput, producing fewer gaps and less silent data loss.

Step 3: Capture Raw Data Without Premature Transformation

Raw data should remain raw. Effective pipelines:

- Store original responses or parsed raw fields

- Avoid early aggregation or filtering

- Preserve source-level identifiers and timestamps

This approach allows downstream teams to reprocess historical data when schemas change, rather than re-scraping entire markets.

Step 4: Normalize and Structure Data Downstream

Normalization belongs outside the scraper. Common processing steps include:

- Deduplicating products across categories and sellers

- Mapping variants and SKUs into stable hierarchies

- Standardizing attributes and category paths

Separating ingestion from normalization makes pipelines easier to debug and extend as business questions evolve.

Step 5: Store Data for Reuse, Not Just Querying

Storage decisions shape how data can be reused. Best practices include:

- Separating raw and processed tables

- Partitioning by marketplace and time

- Designing schemas that tolerate attribute evolution

Well-structured storage ensures scraped data remains useful beyond its initial analysis.

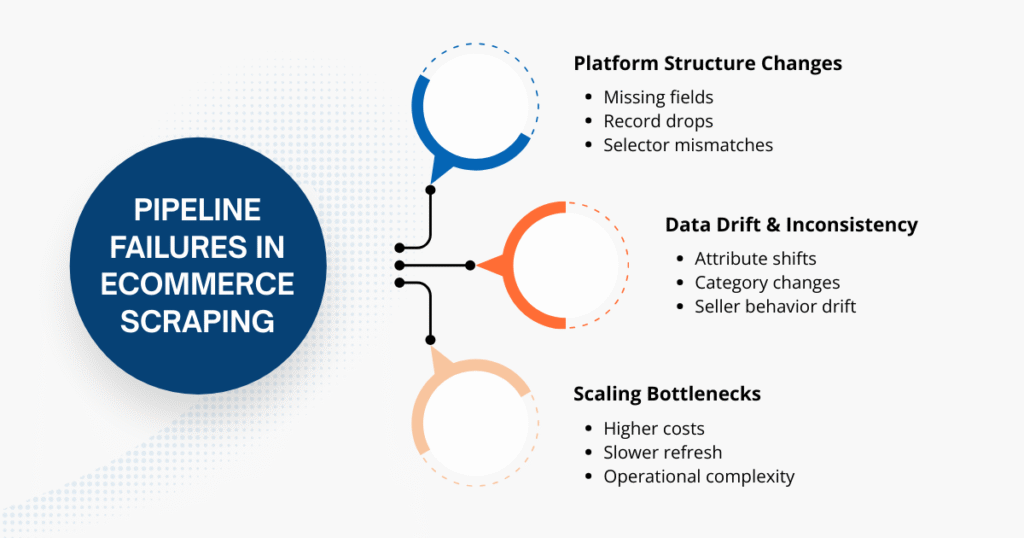

Handling Common Pipeline Failures in Ecommerce Scraping

Even well-designed pipelines encounter failure modes unique to ecommerce data.

Platform Structure Changes

Marketplace HTML and DOM structures change frequently. Pipelines must detect:

- Missing fields

- Sudden drops in record counts

- Selector mismatches

Monitoring data volume and schema integrity is often more effective than monitoring scraper logs alone.

Data Drift and Inconsistency

Over time, product attributes, category definitions, and seller behavior shift. Without normalization strategies, datasets drift silently, undermining longitudinal analysis.

Versioning and attribute auditing help preserve analytical trust.

Scaling Challenges

What works for thousands of products often fails at millions. Scaling introduces:

- Higher infrastructure costs

- Longer refresh cycles

- Greater operational overhead

At this stage, scraping becomes less of a tool problem and more of an infrastructure concern.

When Teams Move Beyond Scrapers to Data Infrastructure

As ecommerce data pipelines mature, scraping often shifts from an exploratory activity to an operational responsibility. At scale, maintaining stable ingestion across frequent platform changes, large product volumes, and long time horizons can consume disproportionate engineering effort.

In response, some teams continue managing scrapers internally, while others externalize parts of the ingestion layer and focus internal resources on data modeling, analysis, and decision-making. In these cases, managed data infrastructure (such as large-scale marketplace data pipelines maintained through continuous scraping) can complement internal systems rather than replace them.

Providers like Easy Data illustrate this model by supporting ingestion reliability and historical coverage, allowing teams to retain analytical flexibility while reducing operational burden, particularly for workflows involving Shopee data scraping.

Conclusion

An ecommerce web scraper remains a powerful entry point to marketplace data. However, long-term value emerges only when scraping is embedded within a thoughtfully designed data pipeline.

Successful teams focus less on extraction tricks and more on architecture, governance, and maintainability. When built correctly, scraping becomes a stable foundation for market intelligence, not a recurring technical liability.

Leave a Reply