Automated web scraping is often the turning point between occasional data collection and a truly data-driven strategy. Manual scraping may work for quick checks, but it rarely scales. The problem is not whether automation is possible, it’s whether it’s implemented in a way that supports real decisions instead of creating silent data chaos.

If you’re considering automation, this guide focuses on how to implement it correctly (not as a coding exercise, but as a structured operational capability).

What Automated Web Scraping Really Means

Many teams assume automated web scraping simply means “running a script every day.” In practice, that definition is too shallow and often dangerous. Automation is not about frequency, it is about consistency, reliability, and repeatability.

True automated web scraping ensures that:

- The same data fields are collected each time

- The same marketplace conditions are observed consistently

- The output structure remains stable over time

Without these elements, increasing frequency only increases noise.

One of the biggest misconceptions teams see is confusing automation with scale. You can run a scraper every hour and still produce unusable data if SKU mapping changes, price formats shift, or campaign mechanics distort the output. Automation is not speed, automation is structured continuity.

For teams applying web scraping for ecommerce, this distinction becomes even more critical because marketplace dynamics amplify small structural inconsistencies over time.

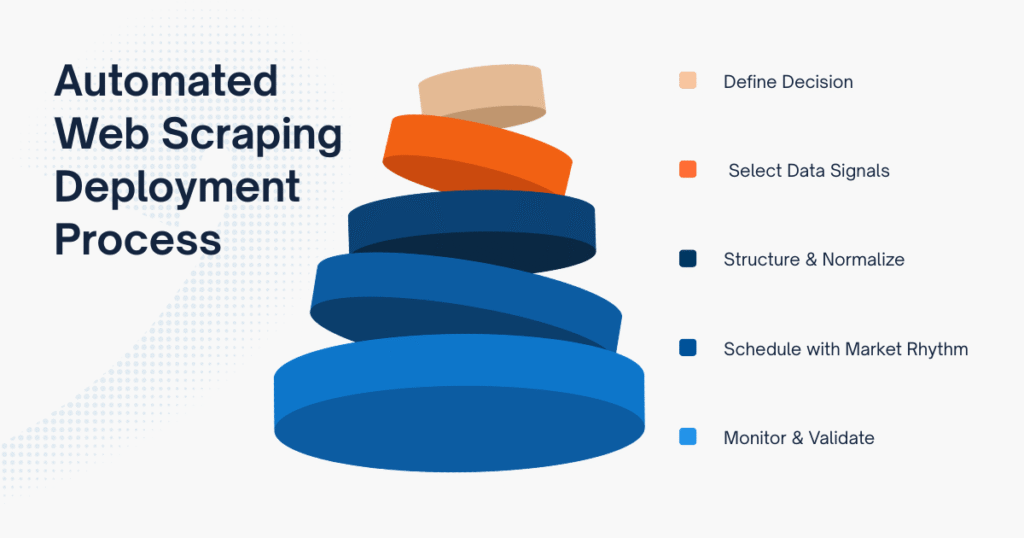

How To Implement Automated Web Scraping: Step-by-Step Guide

Knowing what automation means is one thing. Implementing automated web scraping without creating hidden complexity is another. Many teams automate too early, too broadly, or without structural discipline and only realize months later that the data cannot be trusted. The steps below are designed to prevent that outcome.

Step 1: Define the Decision the Automation Supports

Before touching tools or infrastructure, ask a simple but uncomfortable question: What business decision will this automated web scraping system support? Automation is justified when:

- Pricing decisions depend on daily competitor movement

- Category saturation needs weekly tracking

- Campaign volatility must be monitored in real time

It is not justified when:

- The data is only reviewed quarterly

- The insights are exploratory and temporary

A common mistake is automating data collection before validating that the insight actually drives action. This often results in beautifully structured datasets that nobody uses. Automation should follow decision clarity, not the other way around.

Step 2: Select Data Sources and Signals

Once the decision is clear, the next step is defining what should actually be automated. In ecommerce marketplaces, not every visible element deserves scraping. Effective automated web scraping focuses on:

- Core price fields (base vs promotional)

- Seller identity and entry patterns

- Listing counts and ranking shifts

- Stock availability signals

Trying to scrape “everything” increases complexity and failure points. In practice, signal clarity matters more than data volume.

Step 3: Design a Repeatable Data Structure

This is where many automation projects quietly break. Automation without structured normalization leads to “data drift” (where datasets look complete but cannot be compared across time). Before running automated web scraping at scale, define:

- SKU mapping logic

- Price normalization rules

- Seller identification consistency

- Campaign flag handling

Without this foundation, even a technically perfect scraper produces unreliable analysis. In real ecommerce environments (especially campaign-driven marketplaces), structural consistency matters more than crawling speed.

Step 4: Schedule with Market Behavior in Mind

Timing matters more than most teams expect. Automated web scraping should align with how marketplaces behave:

- Daily during high-volatility campaign windows

- Weekly for structural category shifts

- More frequently before mega sales events

Scraping only during campaign spikes exaggerates volatility. Scraping randomly introduces noise. Effective automation is rhythm-based, not arbitrary.

In Southeast Asia marketplaces, for example, campaign cycles dramatically distort short-term price visibility. This is why experienced teams often design scraping schedules around marketplace calendars rather than fixed internal intervals.

Step 5: Monitor, Validate, and Adjust

The biggest myth about automated web scraping is that once it runs, it runs forever. In reality, automation requires ongoing monitoring because:

- Marketplace layouts evolve

- Campaign mechanics change

- Anti-bot defenses adjust

- Data fields shift subtly

These changes rarely trigger obvious system errors. Instead, they create silent inconsistencies. You need:

- Validation checks

- Historical comparison alerts

- Periodic manual audits

Automation is not “set and forget”, it is “design, monitor, refine”.

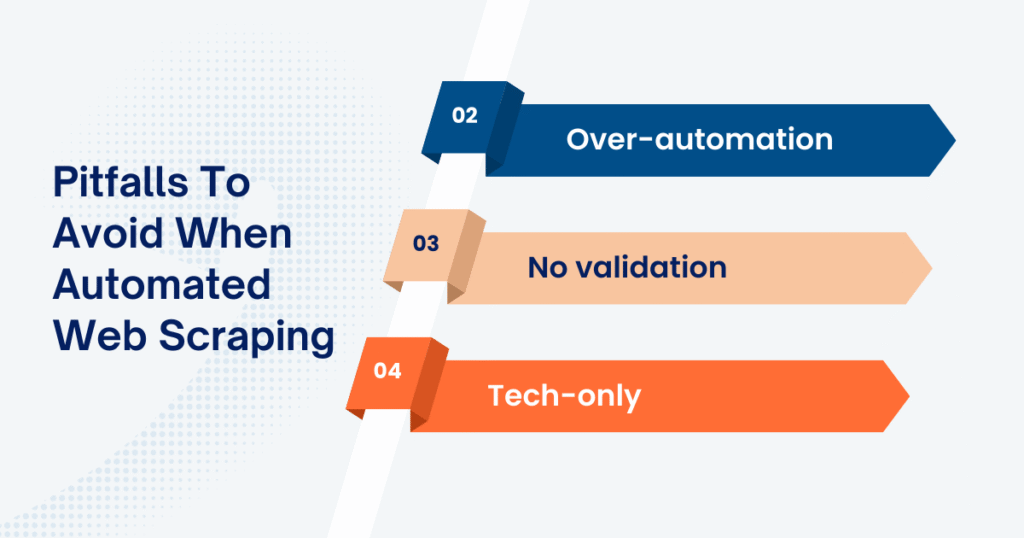

Common Automation Pitfalls in Ecommerce Scraping

After working across multiple ecommerce environments, these patterns repeat consistently:

- Over-automation: Automating exploratory data too early creates unnecessary overhead. Start small, validate insight value, then scale.

- Ignoring data validation: Automation increases confidence (sometimes falsely). Without validation layers, errors compound over time.

- Treating automation as a technical task only: Automated web scraping is often delegated entirely to engineering teams. But without business alignment, the output lacks decision context.

In practice, when companies scale automation across marketplaces like Shopee, TikTok Shop or Lazada, the real challenge is not crawler uptime, it is maintaining structured, validated, and comparable data over months or years. That is why professional ecommerce data scraping services often focus more on normalization discipline and monitoring systems than on crawling speed itself.

Automation becomes powerful only when it is designed around marketplace behavior and long-term analytical continuity.

Conclusion

Automated web scraping is about building a reliable data engine that supports repeatable, confident decisions. When implemented with clear decision alignment, structured normalization, and rhythm-based scheduling, automation transforms marketplace signals into strategic advantage. When rushed or overbuilt, it creates hidden complexity.

The difference is rarely in the tool, it is in the design discipline behind it. If automation is the next step in your data strategy, approach it as infrastructure, not as an experiment.

Leave a Reply