Introduction: The Power of Web Scraping YouTube Comments with Python

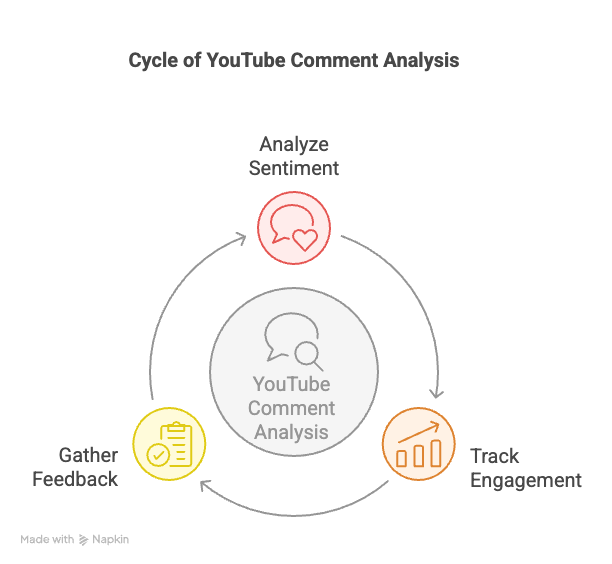

YouTube comments provide a wealth of data that can be used for various purposes like sentiment analysis, customer feedback, and engagement tracking. Web scraping YouTube comments with Python is a powerful method for automating the extraction of this valuable data. By using Python and its robust libraries, you can easily scrape comments, analyze user feedback, and track trends related to specific videos or channels. In this article, we’ll explore how to effectively scrape YouTube comments using Python, from setting up the necessary tools to handling complex data.

What is Web Scraping YouTube Comments with Python?

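Web scraping YouTube comments with Python means using Python scripts and libraries to extract comments from YouTube videos. There are two main approaches. The first is the official YouTube Data API, which returns comments as structured JSON. The second is loading the video page itself and parsing its HTML with BeautifulSoup. YouTube loads comments with JavaScript after the page opens, so a plain HTTP request made with the requests library does not return them. Page scraping therefore needs a browser-automation tool such as Selenium to render the comments first.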

By scraping YouTube comments, businesses, marketers, and researchers can:

- Analyze public sentiment about a video, product, or brand.

- Track engagement metrics and identify popular trends.

- Gather feedback for market research or competitive analysis.

Python is a popular choice for these tasks because of mature libraries such as the Google API client, requests, BeautifulSoup, Selenium, and pandas.

How to Web Scrape YouTube Comments with Python

1. Set Up Your Python Environment

Before you begin, make sure you have Python installed on your computer. You will also need to install the necessary libraries:

```bash
pip install google-api-python-client
pip install requests
pip install beautifulsoup4
```

These libraries allow you to interact with the YouTube Data API, send requests to websites, and parse the HTML content of YouTube video pages.

2. Using the YouTube Data API to Extract Comments

The YouTube Data API is the most structured and reliable way to extract comments from YouTube. Here’s a basic setup to scrape comments from YouTube using the API.

2.1. Create a Google Developer Account and API Key

- Go to the Google Cloud Console (formerly the Google Developers Console).

- Create a new project.

- Enable the YouTube Data API v3.

- Create an API key to authenticate your requests.
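Rather than pasting the key directly into your script, you can keep it out of source code by reading it from an environment variable. This is a minimal sketch that assumes a variable named `YOUTUBE_API_KEY`. That name and the `load_api_key` helper are illustrative choices, not part of the Google tooling.

```python
import os

# Hypothetical variable name; use whatever name fits your setup.
API_KEY_ENV_VAR = "YOUTUBE_API_KEY"


def load_api_key(env_var=API_KEY_ENV_VAR):
    """Return the API key from the environment, failing loudly if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your YouTube Data API key."
        )
    return key
```

Set the variable in your shell (for example `export YOUTUBE_API_KEY=...`). Then replace the hard-coded `api_key = "YOUR_API_KEY"` line in the script that follows with `api_key = load_api_key()`.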

2.2. Python Script to Extract Comments

Once you have your API key, you can use the following Python code to extract comments for a specific YouTube video:

```python
from googleapiclient.discovery import build

# API key from the Google Cloud Console
api_key = "YOUR_API_KEY"

# Build the YouTube Data API v3 client
youtube = build("youtube", "v3", developerKey=api_key)


def get_comments(video_id):
    """Return the text of the first page of top-level comments for a video."""
    comments = []
    request = youtube.commentThreads().list(
        part="snippet",
        videoId=video_id,
        textFormat="plainText",
        maxResults=100,  # the API default is 20 per page; 100 is the maximum
    )
    response = request.execute()
    for item in response["items"]:
        comment = item["snippet"]["topLevelComment"]["snippet"]["textDisplay"]
        comments.append(comment)
    return comments


# Call the function with the video ID (the part of the URL after "v=")
video_id = "VIDEO_ID"  # Replace with the YouTube video ID
comments = get_comments(video_id)
for comment in comments:
    print(comment)
```

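This script retrieves only the first page of top-level comments for a given video ID. To collect every comment, request further pages using the nextPageToken returned in each response. To include replies, fetch them with comments().list, passing each top-level comment's ID as parentId.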
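The script above stops after one page. The sketch below is one way to extend it, not an official recipe. It follows the `nextPageToken` field until the API stops returning one. It can also fetch every reply through `comments().list`, using each top-level comment's ID as `parentId`. The function names `iter_items` and `get_all_comments` are hypothetical. The functions take an already-built `youtube` client as a parameter so you can reuse the one created above.

```python
def iter_items(list_method, **params):
    """Yield every item from a paginated YouTube Data API list call.

    `list_method` is a bound method such as youtube.commentThreads().list.
    The loop follows nextPageToken until the API stops returning one.
    """
    while True:
        response = list_method(**params).execute()
        yield from response.get("items", [])
        token = response.get("nextPageToken")
        if not token:
            return
        params["pageToken"] = token  # request the next page


def get_all_comments(youtube, video_id, include_replies=False):
    """Return the text of every top-level comment (and optionally every reply)."""
    comments = []
    threads = iter_items(
        youtube.commentThreads().list,
        part="snippet",
        videoId=video_id,
        textFormat="plainText",
        maxResults=100,  # maximum page size for commentThreads().list
    )
    for thread in threads:
        top_level = thread["snippet"]["topLevelComment"]
        comments.append(top_level["snippet"]["textDisplay"])
        # Only issue a replies request when the thread actually has replies
        if include_replies and thread["snippet"].get("totalReplyCount", 0) > 0:
            replies = iter_items(
                youtube.comments().list,
                part="snippet",
                parentId=top_level["id"],
                textFormat="plainText",
                maxResults=100,
            )
            comments.extend(reply["snippet"]["textDisplay"] for reply in replies)
    return comments
```

Usage: `comments = get_all_comments(youtube, video_id, include_replies=True)`. Every page of threads and every page of replies is a separate API request, so on heavily commented videos this can use a noticeable share of your daily quota.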

3. Using Selenium and BeautifulSoup for Web Scraping

If you prefer scraping directly from the YouTube webpage instead of using the API, Selenium and BeautifulSoup can help you achieve that. Here’s a basic setup:

3.1. Setting Up Selenium

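To scrape YouTube comments with Selenium, you need Google Chrome and a ChromeDriver that matches your Chrome version:

- Install Selenium:

```bash
pip install selenium
```

- Selenium 4.6 and later includes Selenium Manager, which downloads a matching ChromeDriver automatically. On older Selenium versions, download the ChromeDriver release that matches your version of Chrome.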

3.2. Python Script for Scraping YouTube Comments with Selenium

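```python
import time

from bs4 import BeautifulSoup
from selenium import webdriver

# Selenium 4.6+ finds a matching ChromeDriver automatically. To use a specific
# binary instead: from selenium.webdriver.chrome.service import Service
# driver = webdriver.Chrome(service=Service("/path/to/chromedriver"))
driver = webdriver.Chrome()

try:
    # Open the YouTube video
    url = "https://www.youtube.com/watch?v=VIDEO_ID"  # Replace with the YouTube video URL
    driver.get(url)
    time.sleep(5)  # Let the page load; adjust if necessary

    # YouTube only requests comments once the comment section scrolls into view
    driver.execute_script("window.scrollTo(0, document.documentElement.scrollHeight);")
    time.sleep(5)  # Wait for the first batch of comments to render

    # Parse the rendered page source with BeautifulSoup
    soup = BeautifulSoup(driver.page_source, "html.parser")

    # Comment text sits in elements with id="content-text"; match on the id only,
    # in case YouTube changes the tag name it uses for that element
    comments = [el.get_text(strip=True) for el in soup.find_all(id="content-text")]

    # Print the comments
    for comment in comments:
        print(comment)
finally:
    # Close the browser even if an error occurs
    driver.quit()
```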

Because Selenium drives a real browser, YouTube's JavaScript runs and renders the comments, and BeautifulSoup then extracts them from the rendered HTML. Only the first batch of comments loads this way. The rest appear as you scroll further down the page.

4. Handle Pagination and Infinite Scroll

YouTube loads comments in batches as you scroll down the page. To collect more than the first batch with Selenium, scroll repeatedly until no new content appears. With the YouTube Data API, each response includes a nextPageToken field when more results exist. Pass its value as the pageToken parameter of the next request to fetch the following page.
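For the Selenium route, a common pattern is to scroll to the bottom, wait, and stop once the page height no longer grows. This is a minimal sketch of that pattern. The helper name `scroll_to_load_all`, the 2-second pause, and the 50-round cap are assumptions to tune for your connection and the video's comment count. The only Selenium call it relies on is `driver.execute_script`.

```python
import time


def scroll_to_load_all(driver, pause=2.0, max_rounds=50):
    """Scroll to the bottom until the page height stops growing.

    `driver` is a Selenium WebDriver. Returns the number of scroll rounds used.
    `max_rounds` caps the loop so huge comment sections cannot run forever.
    """
    get_height = "return document.documentElement.scrollHeight"
    last_height = driver.execute_script(get_height)
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        driver.execute_script(
            "window.scrollTo(0, document.documentElement.scrollHeight);"
        )
        time.sleep(pause)  # give YouTube time to fetch the next batch
        new_height = driver.execute_script(get_height)
        if new_height == last_height:
            break  # nothing new loaded, so assume we reached the end
        last_height = new_height
    return rounds
```

Call it after `driver.get(url)` and before reading `driver.page_source`, so BeautifulSoup sees every batch that loaded. A slow network can make the height stall briefly before more comments arrive, so a longer `pause` trades speed for completeness.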

5. Clean and Analyze the Data

Once you've scraped the comments, clean and process the data before analyzing it. For example, remove empty or duplicate comments. The pandas and TextBlob libraries (installed with pip install pandas textblob) can handle both the cleaning and the sentiment analysis:

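```python
import pandas as pd
from textblob import TextBlob

# Convert the scraped comments (a list of strings) to a DataFrame
df = pd.DataFrame(comments, columns=["Comment"])

# Basic cleaning: trim whitespace, drop empty comments and exact duplicates
df["Comment"] = df["Comment"].str.strip()
df = df[df["Comment"] != ""].drop_duplicates(subset="Comment").reset_index(drop=True)

# Sentiment analysis: polarity ranges from -1.0 (negative) to 1.0 (positive)
df["Sentiment"] = df["Comment"].apply(lambda text: TextBlob(text).sentiment.polarity)

# Display the first few comments with their sentiment scores
print(df.head())
```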

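TextBlob's polarity score ranges from -1.0 (most negative) to 1.0 (most positive), and scores near 0 indicate neutral text. This lets you assess whether comments lean positive, negative, or neutral and gain insights into how users feel about a particular video or topic.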
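To turn the numeric scores into positive, negative, and neutral labels, you need a cut-off for "near zero". This sketch assumes a neutral band of 0.05 on either side of zero. That value is an arbitrary illustration, not a TextBlob default, so adjust it after reading a sample of your own comments.

```python
from collections import Counter

# Hypothetical cut-off: scores within NEUTRAL_BAND of zero count as neutral.
NEUTRAL_BAND = 0.05


def polarity_label(score, band=NEUTRAL_BAND):
    """Map a TextBlob polarity score (-1.0 to 1.0) to a coarse label."""
    if score > band:
        return "positive"
    if score < -band:
        return "negative"
    return "neutral"


def label_counts(scores, band=NEUTRAL_BAND):
    """Count how many scores fall into each label."""
    return Counter(polarity_label(score, band) for score in scores)
```

With the DataFrame from the previous step, `df["Label"] = df["Sentiment"].apply(polarity_label)` adds a label column, and `label_counts(df["Sentiment"])` gives a quick overall breakdown.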

Legal and Ethical Considerations

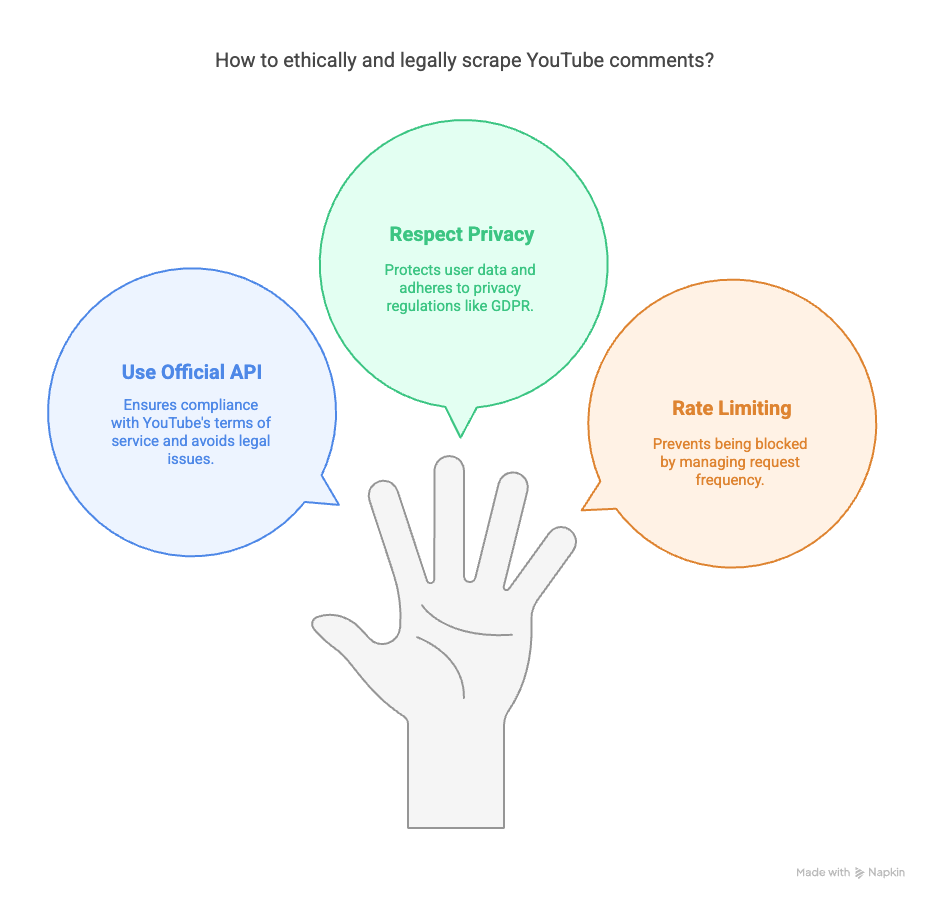

While scraping YouTube comments is useful, be sure to follow ethical and legal guidelines:

- API Usage: Always use the official YouTube API when possible, as scraping directly from the website may violate YouTube’s terms of service.

- Respect Privacy: Avoid collecting personally identifiable information (PII) from comments and adhere to privacy regulations such as GDPR.

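- Rate Limiting: To avoid being blocked, make sure your scraper does not send excessive requests to YouTube's servers. The YouTube Data API also enforces a daily quota, and every request, including each page of comments, counts against it.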
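One simple way to keep request volume in check is to enforce a minimum delay between consecutive requests. This is a minimal sketch of such a throttle. The `Throttle` class and the interval you choose are illustrative assumptions. YouTube does not publish a safe request rate for page scraping, and this does not replace the API's own quota limits.

```python
import time


class Throttle:
    """Enforce a minimum interval (in seconds) between consecutive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock  # injectable so the timing logic can be tested
        self.sleep = sleep
        self._last = None

    def wait(self):
        """Block until at least min_interval has passed since the previous call."""
        now = self.clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self._last = now
```

Create one instance, for example `throttle = Throttle(2.0)`, and call `throttle.wait()` before each `request.execute()` or `driver.get(url)`.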

Conclusion: Unlock the Power of YouTube Comments with Python

Web scraping YouTube comments with Python allows businesses, researchers, and developers to automate the process of collecting user feedback, tracking engagement, and analyzing sentiment. By using tools like the YouTube Data API, Selenium, and BeautifulSoup, you can efficiently extract and analyze comments to gain valuable insights into public opinion. Whether you’re tracking video performance, gathering market feedback, or conducting sentiment analysis, Python offers a powerful framework for scraping YouTube comments.

For more information on how Easy Data can help with your web scraping needs, visit EasyData.io.vn.
