Introduction:

Web scraping has become an essential tool for businesses looking to collect, analyze, and leverage data for decision-making. Whether you’re tracking competitor pricing, extracting customer reviews, or monitoring eCommerce trends, scraping allows businesses to gather valuable insights from websites.

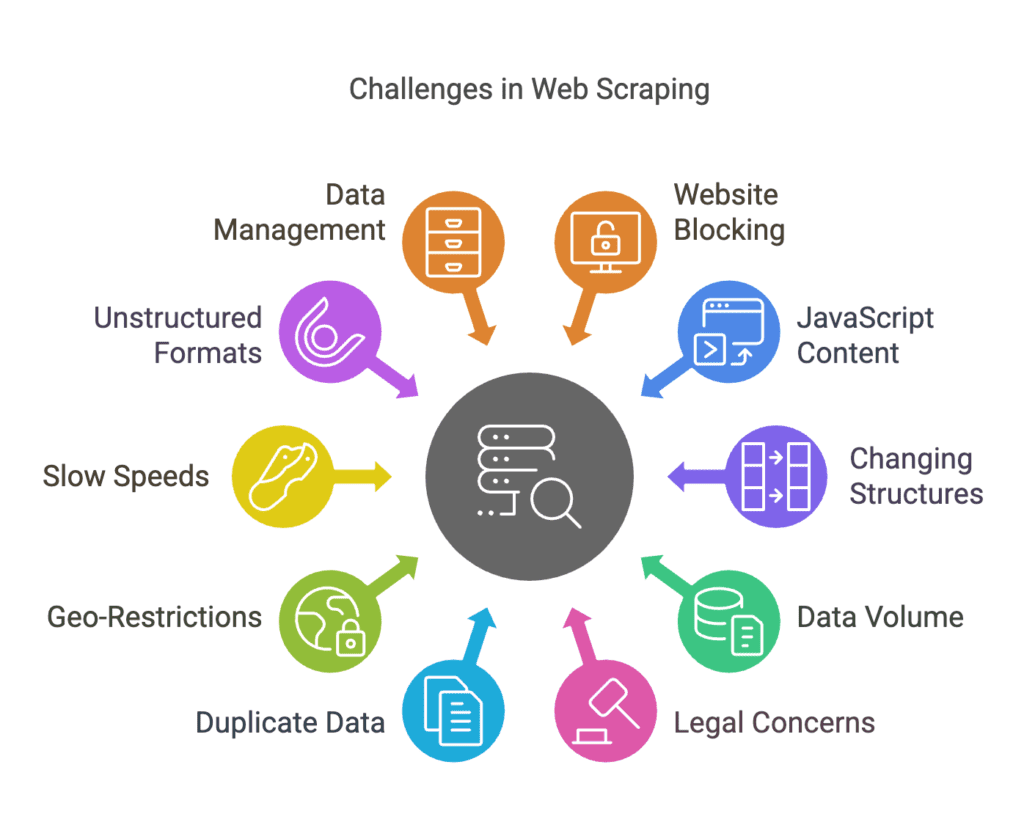

However, web scraping isn’t always smooth sailing. Many businesses face technical, legal, and ethical challenges that can disrupt data extraction. Websites increasingly deploy anti-scraping measures, and handling data at scale is complex in its own right.

In this guide, we’ll explore 10 common web scraping problems, why they happen, and how to solve them, along with the tools and best practices that keep your scraping projects running smoothly and legally.

For a hassle-free, fully automated web scraping solution, check out Easy Data—a trusted provider of custom data extraction services.


10 Common Web Scraping Problems & How to Fix Them

1. Websites Blocking Scrapers (403 Forbidden, CAPTCHAs, IP Bans)

Many websites use bot-detection systems that flag clients sending too many requests in a short period. Once a scraper is flagged, these systems restrict access using:

❌ IP bans that block further requests from the flagged address

❌ CAPTCHAs to verify human presence

❌ 403 Forbidden responses that refuse the request outright

🔎 Solution:

✔ Use rotating proxies & user agents to avoid detection

✔ Integrate a CAPTCHA-solving service such as 2Captcha

✔ Scrape during off-peak hours to reduce server load
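Proxy and user-agent rotation can be sketched with Python's standard library alone. This is a minimal illustration, assuming you already have working proxy endpoints: the proxy URLs and User-Agent strings below are hypothetical placeholders, and the helper names (rotating_openers, fetch) are our own, not part of any library.

```python
import itertools
import urllib.request

# Hypothetical placeholders: substitute proxy endpoints from your provider
# and a realistic, up-to-date set of User-Agent strings.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]


def rotating_openers(proxies, user_agents):
    """Endlessly yield (proxy, user_agent, opener), cycling both lists independently."""
    proxy_cycle = itertools.cycle(proxies)
    ua_cycle = itertools.cycle(user_agents)
    while True:
        proxy = next(proxy_cycle)
        user_agent = next(ua_cycle)
        # Route both HTTP and HTTPS traffic through the current proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        opener = urllib.request.build_opener(handler)
        # Replace urllib's default "Python-urllib/x.y" User-Agent header.
        opener.addheaders = [("User-Agent", user_agent)]
        yield proxy, user_agent, opener


def fetch(url, rotation, timeout=10):
    """Fetch one URL using the next proxy/User-Agent pair in the rotation."""
    _proxy, _user_agent, opener = next(rotation)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Typical use is `rotation = rotating_openers(PROXIES, USER_AGENTS)` followed by `fetch(url, rotation)` for each page. Rotation spreads requests across addresses and browser signatures; it works best combined with the request delays discussed under slow scraping speeds.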

💡 Pro Tip: Easy Data provides IP rotation and CAPTCHA bypass services to ensure uninterrupted data collection.

2. JavaScript-Rendered Content (Dynamic Websites)

Some websites load data dynamically using JavaScript frameworks (React, Angular, Vue.js), so the initial HTML a standard scraper downloads may not contain the data at all.

🔎 Solution:

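✔ Use browser automation tools such as Selenium or Puppeteer to run a headless browser that executes JavaScript

✔ Request the XHR/Fetch endpoints the page itself calls (visible in the browser's DevTools Network tab) instead of parsing rendered HTML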

✔ Check for an official API as an alternative to scraping
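When a page fills itself in from a JSON endpoint, calling that endpoint directly is often simpler than rendering the page. The sketch below is a minimal example under stated assumptions: the endpoint URL you pass in is whatever you found in DevTools, and the payload shape `{"items": [{"name": ..., "price": ...}]}` is hypothetical, so adapt extract_products to the real response.

```python
import json
import urllib.request


def fetch_json(url, user_agent="Mozilla/5.0", timeout=10):
    """GET a JSON endpoint (e.g. one spotted in DevTools' Network tab) and decode it."""
    request = urllib.request.Request(
        url,
        headers={"User-Agent": user_agent, "Accept": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def extract_products(payload):
    """Pull (name, price) pairs from a payload shaped like
    {"items": [{"name": ..., "price": ...}, ...]}; this shape is hypothetical."""
    return [
        (item.get("name"), item.get("price"))
        for item in payload.get("items", [])
    ]
```

Keeping the network call and the parsing separate makes the parser easy to test against a saved response, and missing fields come back as None instead of raising.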

💡 Pro Tip: Puppeteer, a Node.js library maintained by the Chrome team at Google, is well suited to scraping JavaScript-heavy websites.

3. Changing Website Structure

A scraper that works today may break tomorrow when the site updates its HTML. Even a minor change, such as a renamed class or an extra wrapper element, can break extraction.

🔎 Solution:

✔ Write XPath & CSS selectors that target stable attributes (IDs, data-* attributes) rather than fragile positional paths

✔ Monitor website changes with automated alerts

✔ Choose AI-driven scrapers that adapt to structural changes
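One way to make extraction more resilient is to try several selectors in order of stability and treat "nothing matched" as a signal that the layout changed. This sketch uses only the standard library's html.parser; the selector list is a hypothetical example, and matching by a single attribute token is a deliberate simplification of full CSS/XPath selection.

```python
from html.parser import HTMLParser

# Candidate (attribute, value) selectors, most stable first. These are
# hypothetical examples; inspect the target page to choose real ones.
PRICE_SELECTORS = [
    ("data-testid", "product-price"),
    ("itemprop", "price"),
    ("class", "price"),
]

# Elements that never have a closing tag, so they must not change nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class AttrTextFinder(HTMLParser):
    """Collect the text inside the first element whose attribute contains `value`."""

    def __init__(self, attr, value):
        super().__init__()
        self.attr, self.value = attr, value
        self.depth = 0        # > 0 while inside the matched element
        self.found = False
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1   # nested element inside the match
            return
        if self.found:
            return            # only the first match is used
        for name, val in attrs:
            # Split on whitespace so values like class="sale price" still match.
            if name == self.attr and val and self.value in val.split():
                self.found = True
                self.depth = 1
                return

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def extract_first(html, selectors):
    """Try each selector in order; return the stripped text, or None if none matched."""
    for attr, value in selectors:
        finder = AttrTextFinder(attr, value)
        finder.feed(html)
        finder.close()
        if finder.found:
            return "".join(finder.parts).strip()
    return None
```

A None result is a natural trigger for the automated alerts mentioned above: log it or notify someone, since it usually means the page structure changed and the selector list needs updating.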

💡 Pro Tip: Easy Data offers auto-adaptive scraping that detects website changes and adjusts extraction scripts in real time.

4. Handling Large Volumes of Data

Scraping millions of pages means planning for data storage, rate limiting, and processing throughput.

🔎 Solution:

✔ Use asynchronous scraping to process multiple requests at once

✔ Store data in cloud-based databases

✔ Optimize scripts for multi-threading & parallel processing
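Asynchronous scraping with a cap on concurrency can be sketched with asyncio. This is an illustration, not a production crawler: because the standard library has no async HTTP client, it runs blocking urllib calls in worker threads via asyncio.to_thread (Python 3.9+); a library such as aiohttp or httpx would be the usual choice in practice. The max_concurrency value is an arbitrary assumption to tune per site.

```python
import asyncio
import urllib.request


def _fetch_blocking(url, timeout=10):
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


async def fetch(url):
    # urllib is blocking, so run it in a worker thread. A native async client
    # (e.g. aiohttp or httpx) would avoid the thread pool entirely.
    return await asyncio.to_thread(_fetch_blocking, url)


async def crawl(urls, fetch_fn=fetch, max_concurrency=10):
    """Fetch many URLs concurrently, with at most `max_concurrency` in flight.

    Returns (url, result_or_exception) pairs in the same order as `urls`.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:
            try:
                return url, await fetch_fn(url)
            except Exception as exc:  # record failures instead of aborting the batch
                return url, exc

    return await asyncio.gather(*(bounded(url) for url in urls))
```

Run it with `results = asyncio.run(crawl(urls))`. The semaphore is what keeps "asynchronous" from turning into "flood the server": raising it speeds things up, lowering it is gentler on the target site.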

💡 Pro Tip: Amazon S3 is well suited to storing large volumes of scraped data.

5. Legal & Ethical Concerns

Scraping publicly available data is often permissible, but the rules depend on the jurisdiction and the site’s terms; collecting protected or personal data can violate terms of service and privacy laws (GDPR, CCPA).

🔎 Solution:

✔ Always check robots.txt and the site’s terms of service before scraping

✔ Avoid scraping login-protected data

✔ Use official APIs when available
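Python ships a robots.txt parser in urllib.robotparser, so checking permissions before scraping takes only a few lines. In this sketch the bot name "ExampleScraperBot" is a hypothetical placeholder; use the User-Agent your scraper actually sends. Note that robots.txt compliance is only one part of the picture and does not by itself settle the terms-of-service or privacy questions above.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def robots_url_for(page_url):
    """robots.txt lives at the root of the scheme + host."""
    parts = urlsplit(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def load_robots(page_url):
    """Download and parse the robots.txt that governs `page_url`."""
    parser = RobotFileParser(robots_url_for(page_url))
    parser.read()
    return parser


def is_allowed(parser, page_url, user_agent="ExampleScraperBot"):
    """True if robots.txt permits `user_agent` to fetch `page_url`."""
    return parser.can_fetch(user_agent, page_url)
```

After `rp = load_robots(url)`, call `is_allowed(rp, url)` before each request, and `rp.crawl_delay("ExampleScraperBot")` returns the site's requested delay in seconds (or None) if it declares one.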

💡 Pro Tip: Many platforms offer official APIs (for example, the Twitter/X API) as legal alternatives to scraping.

6. Avoiding Duplicate Data

Large-scale scraping often results in duplicate records, leading to misleading analysis.

🔎 Solution:

✔ Use unique identifiers (SKUs, IDs) to filter duplicates

✔ Store data in structured form (a SQL database or JSON records)

✔ Implement data deduplication techniques

💡 Pro Tip: Use the pandas library in Python (its duplicated() and drop_duplicates() methods) for duplicate detection & cleanup.
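If you would rather not pull in pandas, key-based deduplication is easy to write by hand. This sketch assumes records are dicts and that "sku" or "id" (hypothetical field names) is the unique identifier when present; records without one fall back to a hash of their full contents, so only exact duplicates are dropped in that case.

```python
import hashlib
import json


def record_key(record, id_fields=("sku", "id")):
    """Return a dedup key: the first unique identifier present, else a content hash."""
    for field in id_fields:
        if record.get(field):
            return f"{field}:{record[field]}"
    # No identifier: hash a canonical JSON form so key order doesn't matter.
    canonical = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return "sha256:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def deduplicate(records):
    """Keep the first occurrence of each key, preserving input order."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

Keeping the first occurrence mirrors pandas' default (`df.drop_duplicates(subset=["sku"], keep="first")`). If a later scrape should win instead, for example to capture a price change, update the stored record rather than skipping it.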

7. Geo-Restricted Content

Some websites display different data based on location (e.g., different prices in different countries).

🔎 Solution:

✔ Use geo-targeted proxies

✔ Route requests through a VPN server located in the target country

✔ Scrape from multiple geographic locations
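Fetching the same page from several regions amounts to pointing each request at a proxy in the right country. The country-to-proxy mapping below is entirely hypothetical (a geo-proxy provider would supply real endpoints), and the helper names are our own; the sketch just shows the structure with the standard library.

```python
import urllib.request

# Hypothetical geo-targeted proxy endpoints; a provider would give you real ones.
GEO_PROXIES = {
    "us": "http://us.proxy.example.com:8000",
    "de": "http://de.proxy.example.com:8000",
    "jp": "http://jp.proxy.example.com:8000",
}


def opener_for_country(country, proxies=GEO_PROXIES):
    """Build an opener that routes traffic through the proxy for `country` (KeyError if unknown)."""
    proxy = proxies[country]
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    opener.addheaders = [("User-Agent", "Mozilla/5.0")]
    return opener


def fetch_by_region(url, countries, proxies=GEO_PROXIES, timeout=15):
    """Fetch `url` once per country; map each country to its body or the error raised."""
    results = {}
    for country in countries:
        opener = opener_for_country(country, proxies)
        try:
            with opener.open(url, timeout=timeout) as response:
                results[country] = response.read()
        except OSError as exc:  # urllib.error.URLError is a subclass of OSError
            results[country] = exc
    return results
```

Comparing, say, `fetch_by_region(product_url, ["us", "de"])` side by side is how location-dependent prices become visible.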

💡 Pro Tip: Bright Data offers residential & geo-targeted proxies for scraping location-based content.

8. Slow Scraping Speeds

Scraping speed is a balancing act: a scraper that fetches pages one at a time is slow, but sending too many requests too quickly can overload the target server or trigger IP bans.

🔎 Solution:

✔ Implement rate limiting & request delays

✔ Use asynchronous requests

✔ Optimize scripts for efficiency
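Rate limiting can be as simple as enforcing a minimum gap between requests, optionally with random jitter so the timing looks less mechanical. This is a minimal single-threaded sketch; the interval and jitter values are assumptions you would tune per site (or set from a robots.txt Crawl-delay), and the class name is our own.

```python
import random
import time


class RateLimiter:
    """Enforce a minimum delay (plus optional random jitter) between requests."""

    def __init__(self, min_interval, jitter=0.0):
        self.min_interval = min_interval  # seconds between consecutive requests
        self.jitter = jitter              # extra random delay, 0..jitter seconds
        self._last = None

    def wait(self):
        """Block until enough time has passed since the previous call."""
        now = time.monotonic()
        if self._last is not None:
            target = self.min_interval + random.uniform(0, self.jitter)
            remaining = target - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
                now = time.monotonic()
        self._last = now
```

Call `limiter.wait()` immediately before each request. The first call returns at once; later calls sleep only for whatever part of the interval has not already elapsed, so slow responses do not add unnecessary extra delay.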

💡 Pro Tip: Easy Data provides high-speed scraping solutions.

9. Extracting Data in Unstructured Formats

Some websites embed data in hard-to-parse places such as hidden form fields, deeply nested elements, or JSON inside script tags.

🔎 Solution:

✔ Use regular expressions (regex) to extract text patterns such as prices or dates

✔ Parse JSON responses from API calls

✔ Use AI-powered extraction tools

💡 Pro Tip: Check out JSONPath for extracting nested data structures.
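Many product pages embed structured data as JSON-LD in a `<script type="application/ld+json">` tag, which is far easier to parse than the visible markup. The sketch below pulls those blocks out with html.parser, extracts prices from free text with a regex (assuming $, € or £ amounts with comma thousands separators), and uses a tiny dig helper for nested lookups. dig is our own minimal stand-in, not JSONPath itself.

```python
import json
import re
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect every parsed <script type="application/ld+json"> block on a page."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buf = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buf = []

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buf)))
            except json.JSONDecodeError:
                pass  # skip malformed blocks rather than failing the whole page

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)


# Currency symbol, optional space, then e.g. 999, 1,299 or 1,299.00.
PRICE_RE = re.compile(r"[$€£]\s?(\d{1,3}(?:,\d{3})*(?:\.\d{2})?)")


def extract_prices(text):
    """Return every price found in `text` as a float."""
    return [float(m.replace(",", "")) for m in PRICE_RE.findall(text)]


def dig(obj, *keys, default=None):
    """Follow dict keys / list indexes into nested data; return `default` on any miss."""
    for key in keys:
        try:
            obj = obj[key]
        except (KeyError, IndexError, TypeError):
            return default
    return obj
```

Prefer the JSON-LD route when it exists, because it survives visual redesigns. Treat the regex as a fallback for prices that appear only in running text.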

10. Managing Scraped Data Efficiently

Handling millions of scraped records requires proper indexing, storage, and retrieval.

🔎 Solution:

✔ Store data in scalable cloud databases

✔ Use ETL pipelines for processing

✔ Implement real-time data pipelines
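A small extract-transform-load step shows the idea behind indexing and upserts, even before moving to a cloud database. This sketch uses SQLite from the standard library as a local stand-in; the table layout and field names are hypothetical, and the ON CONFLICT upsert syntax requires SQLite 3.24 or newer.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    sku        TEXT PRIMARY KEY,
    name       TEXT NOT NULL,
    price      REAL,
    scraped_at TEXT NOT NULL
);
CREATE INDEX IF NOT EXISTS idx_products_scraped_at ON products (scraped_at);
"""


def transform(raw):
    """Normalize one scraped record (the field names here are hypothetical)."""
    price = str(raw.get("price") or "").replace("$", "").replace(",", "").strip()
    return {
        "sku": str(raw["sku"]).strip(),
        "name": (raw.get("name") or "").strip(),
        "price": float(price) if price else None,
        "scraped_at": raw["scraped_at"],
    }


def load(conn, rows):
    """Insert new products, or update existing ones that share the same SKU."""
    conn.executemany(
        """INSERT INTO products (sku, name, price, scraped_at)
           VALUES (:sku, :name, :price, :scraped_at)
           ON CONFLICT(sku) DO UPDATE SET
               name = excluded.name,
               price = excluded.price,
               scraped_at = excluded.scraped_at""",
        rows,
    )
    conn.commit()
```

The primary key doubles as a deduplication guard, and the index on scraped_at keeps "what changed since yesterday" queries fast as the table grows. The same pattern carries over to cloud databases.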

💡 Pro Tip: Google BigQuery is great for analyzing large scraped datasets.

How Easy Data Solves Web Scraping Problems

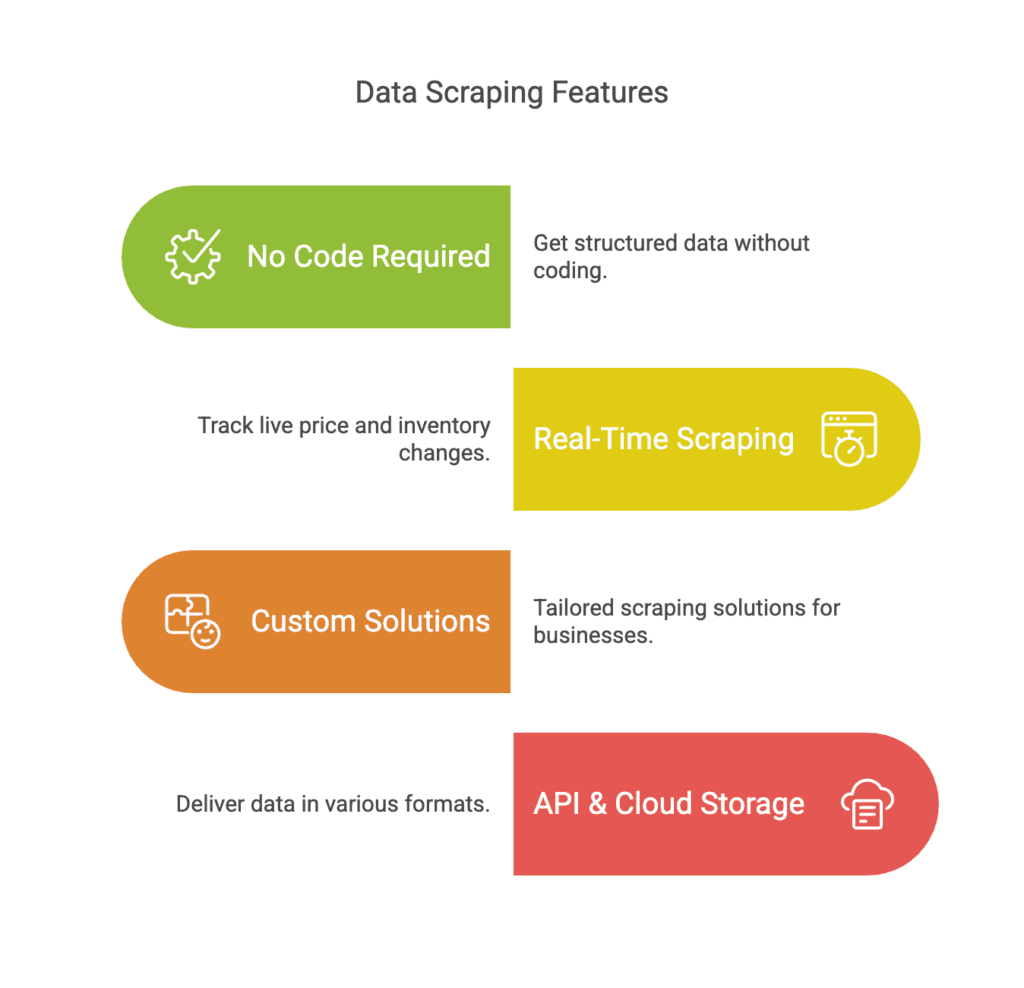

Instead of troubleshooting issues manually, businesses can use Easy Data—a fully automated web scraping service designed to handle these challenges efficiently.

Why Choose Easy Data?

🚀 No Code Required – Get ready-to-use, structured data

📊 Real-Time Scraping – Track live price & inventory changes

🔄 Custom Scraping Solutions – Tailored to your business needs

💾 API & Cloud Storage – Deliver data in CSV, JSON, or database formats

✅ Want hassle-free web scraping? Contact Easy Data today.

Final Thoughts: Overcoming Web Scraping Problems for Business Growth

Web scraping offers powerful business insights, but technical, ethical, and operational challenges can slow you down. By implementing the right solutions, automation tools, and ethical scraping practices, businesses can extract high-quality data seamlessly.

📩 Need web scraping solutions? Contact Easy Data today!
